Working with LLMs is shifting from purely human-machine interactions to a mix of human-machine and machine-machine interactions. This lets LLMs take on increasingly complex tasks. Systems built around this new interactivity have come to be called AI agents.

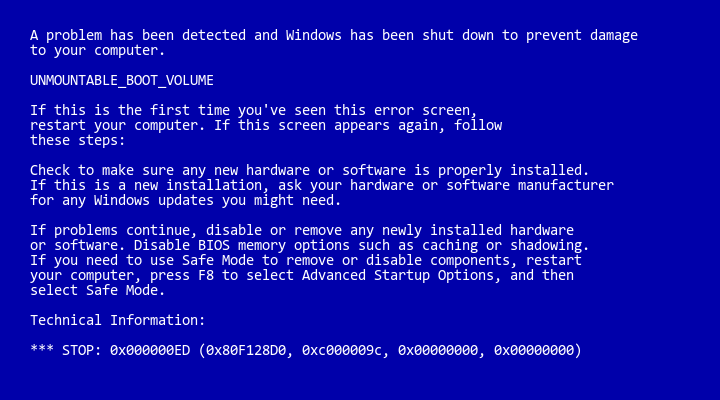

Threaded conversations lack the structure needed to complete complex tasks. As a result, objective divergence, where the agent drifts away from the goal it was given, is a common issue with AI agents. Objective divergence is the equivalent of a blue screen in traditional computers.
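To make "structure" more concrete, here is a minimal Python sketch. It is not MAC-1's implementation. It assumes the agent works from an explicit plan with a fixed objective, and it checks every proposed action against the next planned step so that an off-plan action is rejected instead of quietly pulling the agent away from its goal. The `Plan` and `Step` classes and all action names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    """One step of an explicit plan: the only action allowed at this point."""
    action: str
    description: str


@dataclass
class Plan:
    """A fixed objective plus the ordered steps that serve it (hypothetical structure)."""
    objective: str
    steps: list[Step]
    cursor: int = 0
    log: list[str] = field(default_factory=list)

    def propose(self, action: str) -> bool:
        """Accept an action only if it is the next planned step; reject anything else."""
        if self.cursor >= len(self.steps):
            self.log.append(f"rejected {action!r}: plan already complete")
            return False
        expected = self.steps[self.cursor].action
        if action != expected:
            self.log.append(f"rejected {action!r}: expected {expected!r}")
            return False
        self.log.append(f"accepted {action!r}")
        self.cursor += 1
        return True

    @property
    def done(self) -> bool:
        """True once every planned step has been accepted."""
        return self.cursor == len(self.steps)


plan = Plan(
    objective="Find flights and accommodation for a trip to Barcelona",
    steps=[
        Step("ask_dates", "Ask the user for travel dates"),
        Step("search_flights", "Search flights to Barcelona"),
        Step("search_hotels", "Search accommodation in Barcelona"),
    ],
)

print(plan.propose("ask_dates"))       # True
print(plan.propose("write_poem"))      # False: off-plan, treated as divergence
print(plan.propose("search_flights"))  # True
print(plan.propose("search_hotels"))   # True
print(plan.done)                       # True
```

Rejecting off-plan actions is only one possible constraint; an agent could instead re-plan or ask the user when a proposed action does not fit the objective.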

I’m currently researching how to make agents more reliable, mainly by giving them structure and constraints. Here is a prompt that the MAC-1 agent handles in a few seconds: “Find flights and accommodation for my next trip to Barcelona”

Note what the agent does:

- It detects the intent(s) in the prompt.

- It interacts with the user to get more information.

- It’s stateful, so it’s aware of its previous actions.

- It chains actions.

- It operates a browser.

- It maps natural language onto specific formats, e.g. “next Friday” → “26-01-2024”.
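Several of the capabilities above can be illustrated with a toy Python sketch. This is not how MAC-1 works. Intent detection uses a hypothetical keyword table (a real agent would more likely ask the LLM to classify intents), the user's reply is simulated, the browser step is stubbed out, and “next Friday” is assumed to mean the first Friday strictly after the reference date. With an assumed reference date of Monday 22 January 2024, it reproduces the “26-01-2024” mapping.

```python
import re
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional

WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday", "friday", "saturday", "sunday"]

# Hypothetical keyword table; a real agent would more likely ask the LLM to classify intents.
INTENT_KEYWORDS = {
    "search_flights": ("flight",),
    "search_accommodation": ("accommodation", "hotel"),
}


def detect_intents(prompt: str) -> list[str]:
    """Return every intent whose keywords appear in the prompt; one prompt can carry several."""
    text = prompt.lower()
    return [name for name, words in INTENT_KEYWORDS.items() if any(w in text for w in words)]


def normalize_date(phrase: str, today: date) -> Optional[str]:
    """Map 'next <weekday>' to DD-MM-YYYY, read as the first such weekday strictly after today."""
    match = re.fullmatch(r"next (\w+)", phrase.strip().lower())
    if match is None or match.group(1) not in WEEKDAYS:
        return None
    target = WEEKDAYS.index(match.group(1))
    days_ahead = (target - today.weekday()) % 7 or 7  # 0 would mean today, so jump a week
    return (today + timedelta(days=days_ahead)).strftime("%d-%m-%Y")


@dataclass
class Session:
    """Keeps state across turns so later actions can reuse earlier results."""
    today: date
    slots: dict[str, str] = field(default_factory=dict)
    history: list[str] = field(default_factory=list)

    def run(self, prompt: str, user_answer: str) -> list[str]:
        intents = detect_intents(prompt)
        self.history.append(f"detected {intents}")
        # Ask the user only when a required slot is missing; the reply is simulated here.
        if "departure_date" not in self.slots:
            self.history.append("asked user for departure date")
            parsed = normalize_date(user_answer, self.today)
            if parsed is None:
                return self.history
            self.slots["departure_date"] = parsed
        # Chain one action per intent; the browser step is stubbed out and only logged.
        for intent in intents:
            self.history.append(f"{intent} on {self.slots['departure_date']}")
        return self.history


session = Session(today=date(2024, 1, 22))  # assumed reference date (a Monday)
prompt = "Find flights and accommodation for my next trip to Barcelona"
for line in session.run(prompt, user_answer="next Friday"):
    print(line)
# detected ['search_flights', 'search_accommodation']
# asked user for departure date
# search_flights on 26-01-2024
# search_accommodation on 26-01-2024
```

Keeping `slots` on the session is what makes this toy agent stateful: a second call to `run` reuses the stored date instead of asking the user again.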

I’m actively working on this and I’ll share more. Follow me on X.